AI Weave is creating the foundation for the AI-driven future, ensuring the technology of tomorrow can propel our world forward.

Quality Service ✨ Guaranteed

AI Weave provides everything you need to build scalable AI solutions—from robust inference and AI/ML ops tools to flexible access to top-tier GPUs.

AI Weave Inference Engine gives developers the speed and scalability they need to run AI models with dedicated inferencing optimized for ultra-low latency and maximum efficiency.

Reduce costs and boost performance at every stage with the ability to deploy models instantly, auto-scale workloads to meet demand, and deliver faster, more reliable AI predictions.

+

Professionals Team+

Years of Average Experience+

Successful Projects DeliveredFully dedicated bare metal servers with native cloud integration, at the best price.

Optimize GPU performance with seamless workload orchestration.

Boost AI performance with ultra-fast DeepSeek R1 & Llama 3 inference.

Get assistance from our team of GPU specialists whenever needed.

Blueket overcomes challenges, achieves results, and adds value to our clients and partners. Take a look at some of our clients' success stories. Take a look at some of our clients' success stories.

Together, we redefine industry standards by combining your domain expertise with our robust AI/ML ops tools, sovereign cloud capabilities, and hyper-low-latency networking. Whether you're a tech innovator, systems integrator, or enterprise leader, let’s co-create the next generation of AI-driven transformation.

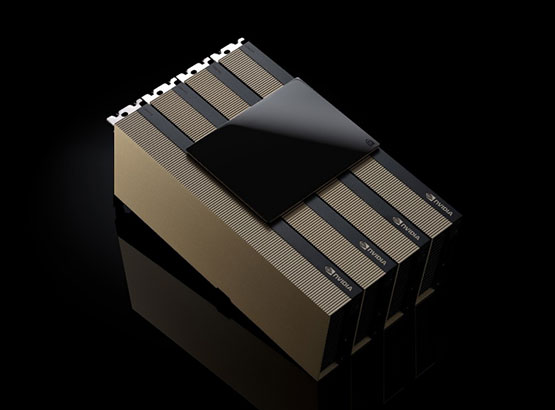

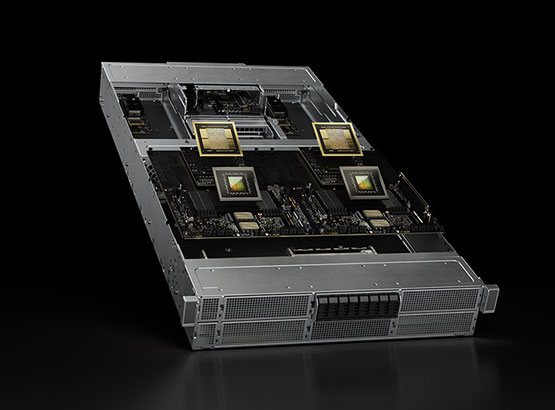

Train with the NVIDIA® H200 GPU cluster with Quantum-2 InfiniBand networking.

Experience cutting-edge advancements in AI and HPC with the NVIDIA H200 GPU, ideal for demanding AI models and intensive computing applications.

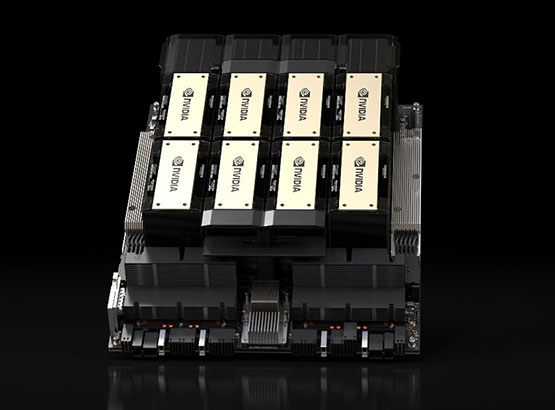

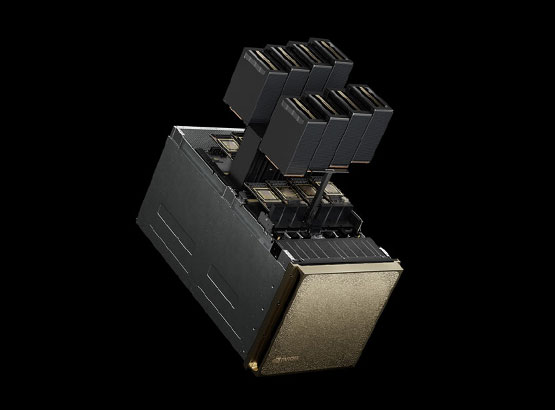

Powered by dual Blackwell GPUs and NVIDIA’s NVLink® interconnect, the GB200 NVL72 is purpose-built to handle massive AI workloads, offering seamless integration into existing infrastructures through NVIDIA’s scalable MGX™ architecture.

Future-Proof Your AI with Blackwell Cloud and the GB200 NVL72.

Blackwell Cloud provides access to the NVIDIA HGX™ B200, purpose-built to accelerate large-scale AI and HPC workloads. With up to 1.5TB (192 GB per GPU*8) memory and support for FP8 and FP4 precision, users can access faster training and inference of advanced models across NLP, computer vision, and generative AI domains.

AI Weave exists to bring your boldest AI ambitions to life. We provide the infrastructure, expertise, and full-stack platform to help you build, deploy, and scale AI without limits.

AI offers transformative potential — but it 's complex. That 's why we partner with startups and enterprises alike to simplify the journey, accelerate innovation, and unlock growth. More than a provider, we 're your AI infrastructure partner in a rapidly evolving world.

NVIDIA H100

NVIDIA H200

NVIDIA B200

Read more about how Blueket works and how it can help you.

Begin a Quick Discussion

Marketing@AIweave.comAI Weave operates data centers worldwide, ensuring low latency and high availability for your AI workloads.

Get Started